Amazon AWS CloudFormation Template

To deploy a redundant proxy configuration, follow these steps:

- Deploy a Heimdall Central Manager via the AWS Marketplace. This instance doesn't need to be particularly large; many customers use a t3.medium;

- Ensure the application works appropriately through a proxy instance hosted on the management server;

- Once the application is validated, un-check the proxy option "Local proxy", which will terminate the proxy on this management server;

- To fully dedicate the memory of the management server to management activities, follow the heimdall.conf instructions here;

- Deploy a cluster of proxy-only nodes using the template located at: Template.

Note: Support for this template is provided exclusively to Heimdall Enterprise Edition customers, since customers that require this level of redundancy also typically require 24/7 support. Heimdall offers private offer pricing to customers on a negotiated basis if needed. The template is designed to pull the newest Enterprise (ARM) image in any region automatically. If a custom AMI ID is desired, create an SSM parameter with the desired AMI ID as its value, then use the parameter name as the AMI Alias in the CF template. On deployment, the template resolves the AMI ID from the SSM parameter.
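As an illustration of the custom AMI step, the following is a minimal sketch that builds the request for the SSM PutParameter call. The parameter name and AMI ID are hypothetical placeholders, and the use of the `aws:ec2:image` data type is an assumption that may or may not be required by the template; the actual API call is left as a comment because it needs boto3 and AWS credentials.

```python
import re

# AMI IDs are "ami-" followed by 8 or 17 lowercase hex characters.
AMI_ID_PATTERN = re.compile(r"^ami-(?:[0-9a-f]{8}|[0-9a-f]{17})$")


def build_ami_parameter(name: str, ami_id: str) -> dict:
    """Build keyword arguments for an SSM PutParameter call holding an AMI ID."""
    if not name:
        raise ValueError("parameter name must not be empty")
    if not AMI_ID_PATTERN.match(ami_id):
        raise ValueError(f"not a valid AMI ID: {ami_id!r}")
    return {
        "Name": name,
        "Value": ami_id,
        "Type": "String",
        # Assumption: asks SSM to validate that the value is an AMI ID.
        "DataType": "aws:ec2:image",
    }


# Hypothetical parameter name and AMI ID, for illustration only.
request = build_ami_parameter("/heimdall/proxy/custom-ami", "ami-0123456789abcdef0")

# To create the parameter (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("ssm").put_parameter(**request)
```

The parameter name (here `/heimdall/proxy/custom-ami`) is then what would be entered as the AMI Alias in the template.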

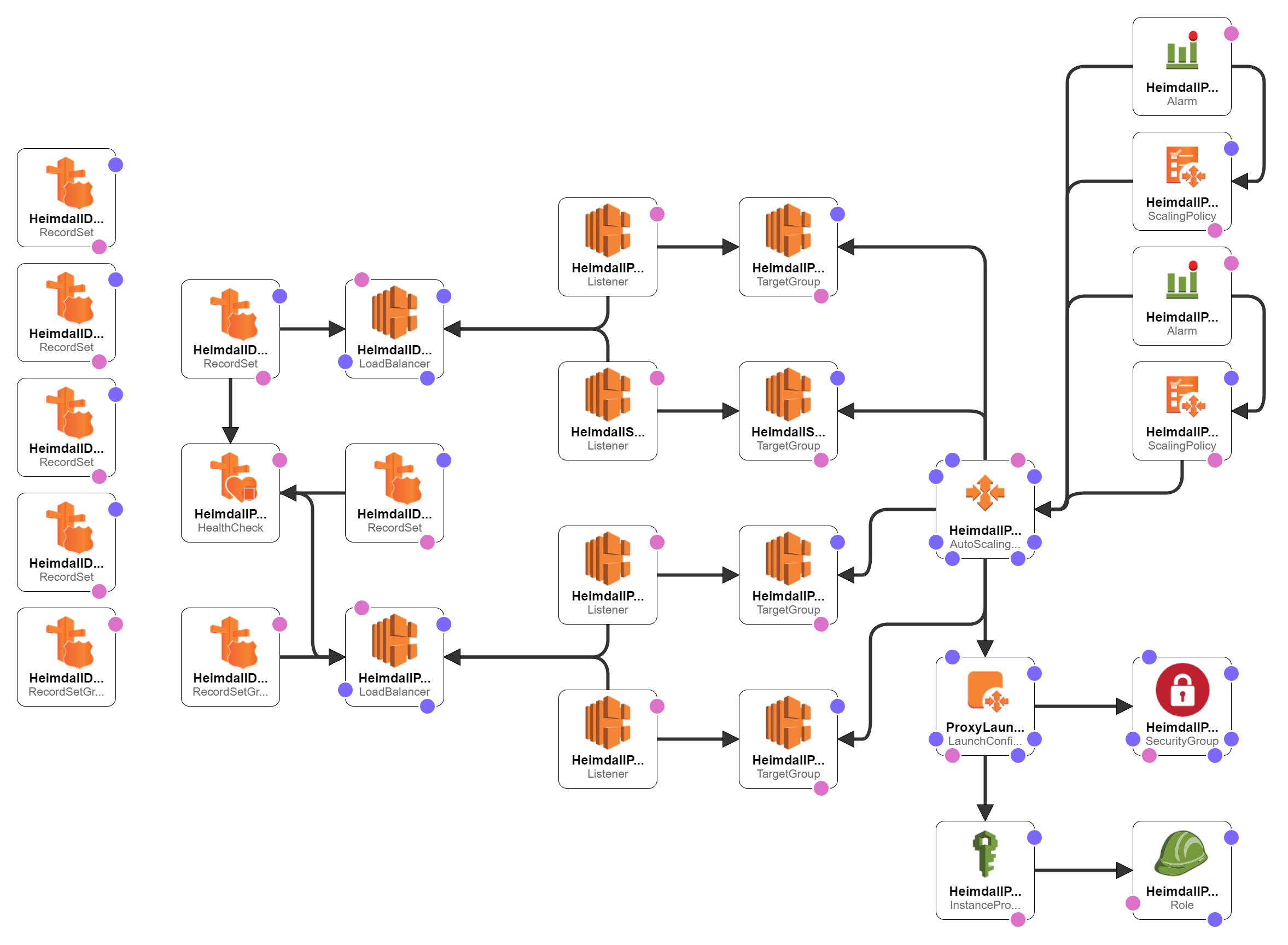

The resources created by this template are:

- One or two Network Load Balancers with Listener (billable). Note: We support both database protocol load balancing and distributing traffic via DNS queries. In the DNS case, the NLB costs will be significantly lower, but overall traffic distribution may not be as even across nodes.

- Autoscaling configuration with Target Group

- Scale up and down policies with alarms

- Launch Configuration with instance policy and IAM role

- Security group for proxy EC2 instances

- (Optional) Route53 records to monitor the proxies and provide easy-to-use hostnames to access the proxies, including with geolocation capability (billable).

In the case of the DNS-based NLB setup, the template will also configure:

- A record pointing to the NLB target hostname

- An NS record that points to the A record pointing to the NLB target hostname

It depends on:

- Existing VPC & Subnets

- An existing Heimdall Central Manager

- SSH Key

All resources will be named based on the stack name as appropriate, and their creation can be reversed by deleting the stack.

Heimdall assumes no responsibility for charges incurred from AWS through the use of this template. In particular, beyond the EC2 instances, the network load balancer may incur charges, as explained "here".

Template Options

For all template options, the following are required:

- ProxyMinimum and ProxyMaximum set to numeric values

- HeimdallProxyVPC configured, and HeimdallProxyAvailablityZones and HeimdallPublicProxySubnetList configured, with all parameters in alignment

- ProxyPublicIpAddress can be set to true if public routing will be used, but for single region deployments, this is typically set to false

- HeimdallProxyAMIID is set to the AMI alias for the Heimdall Enterprise (ARM) edition. With minor edits, the template can use Intel instances instead if needed.

- InstanceTypeParameterProxy is set to an appropriately sized instance type

- Keyname for the SSH key to install on the system

- vdbName is configured

- hdHost is configured

- hdAccessKey is configured. This could be the admin user OR the VDB access key. This could also be configured to use AWS Secrets Manager; in that case, specify this parameter in the format secret:<secret name or ARN>. The secret name should match the one configured in the VDB Access/Secret key settings. The proxy will retrieve these values on startup and use them to communicate with the manager.

- hdSecretKey is configured. This could be the admin password or the VDB secret key. This parameter should remain empty when AWS Secrets Manager is used in the hdAccessKey parameter.

- ProxyPort is configured.

- ProxyLogGroupName is required (you can use the default) because it defines the CloudWatch log group name needed for the AWS permissions policy for logs and metrics.

Note: The HeimdallProxyAMIId defaults to: /aws/service/marketplace/prod-6k3x2q5j7vg32/latest for the ARM Enterprise Edition. Other valid options are:

- /aws/service/marketplace/prod-upicfbvjlnfqo/latest for the ARM Enterprise Plus Edition

- /aws/service/marketplace/prod-ahm64zezggfhg/latest for the Intel Enterprise Edition

- /aws/service/marketplace/prod-qomddleky3adi/latest for the Intel Standard Edition

Please note that in production, we only support the Enterprise edition options for larger installs with autoscaling.
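The required options listed in this section can be checked before deployment. The following is a minimal sketch, not part of the template, that validates a set of values and converts them into the `ParameterKey`/`ParameterValue` list accepted by the CloudFormation CreateStack API. Parameter names are copied as they appear in the list above and should be verified against the template itself; every value in `example` is a hypothetical placeholder except the AMI alias, which is the documented default.

```python
from typing import Dict, List

# Names copied from the required-options list; verify the exact spelling
# against the template you deploy.
REQUIRED_PARAMETERS = (
    "ProxyMinimum", "ProxyMaximum", "HeimdallProxyVPC",
    "HeimdallProxyAvailablityZones", "HeimdallPublicProxySubnetList",
    "HeimdallProxyAMIID", "InstanceTypeParameterProxy", "Keyname",
    "vdbName", "hdHost", "hdAccessKey", "ProxyPort", "ProxyLogGroupName",
)


def build_stack_parameters(values: Dict[str, str]) -> List[Dict[str, str]]:
    """Validate required options and return a CreateStack-style parameter list."""
    missing = [k for k in REQUIRED_PARAMETERS if not str(values.get(k, "")).strip()]
    if missing:
        raise ValueError("missing required parameters: " + ", ".join(missing))
    for key in ("ProxyMinimum", "ProxyMaximum"):
        if not str(values[key]).isdigit():
            raise ValueError(f"{key} must be numeric")
    if int(values["ProxyMinimum"]) > int(values["ProxyMaximum"]):
        raise ValueError("ProxyMinimum must not exceed ProxyMaximum")

    # hdSecretKey stays empty when hdAccessKey points at AWS Secrets Manager.
    uses_secrets_manager = str(values["hdAccessKey"]).startswith("secret:")
    secret_key = str(values.get("hdSecretKey", ""))
    if uses_secrets_manager and secret_key:
        raise ValueError("hdSecretKey must be empty when hdAccessKey uses secret:")
    if not uses_secrets_manager and not secret_key:
        raise ValueError("hdSecretKey is required unless hdAccessKey uses secret:")

    merged = {**values, "hdSecretKey": secret_key}
    return [
        {"ParameterKey": key, "ParameterValue": str(value)}
        for key, value in sorted(merged.items())
    ]


# Hypothetical values for illustration only.
example = {
    "ProxyMinimum": "2",
    "ProxyMaximum": "4",
    "HeimdallProxyVPC": "vpc-0123456789abcdef0",
    "HeimdallProxyAvailablityZones": "us-east-1a,us-east-1b",
    "HeimdallPublicProxySubnetList": "subnet-aaaa1111,subnet-bbbb2222",
    "HeimdallProxyAMIID": "/aws/service/marketplace/prod-6k3x2q5j7vg32/latest",
    "InstanceTypeParameterProxy": "t4g.medium",
    "Keyname": "my-ssh-key",
    "vdbName": "example-vdb",
    "hdHost": "manager.example.internal",
    "hdAccessKey": "secret:heimdall/example-vdb",
    "ProxyPort": "5432",
    "ProxyLogGroupName": "heimdall-proxy-logs",
}
parameters = build_stack_parameters(example)
```

The resulting list could be passed as the `Parameters` argument of a CreateStack request; optional parameters such as ProxyPublicIpAddress can be added to the input dictionary in the same way.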

Single Region NLB Balanced Cluster

The first and simplest configuration that the template supports is one where NLB is used to load balance an autoscaling group of proxies, and the load balancer name is used to access the proxies in a single region. In the template parameters, to configure this, the following are needed:

LBType=nlb

The following options should be left blank to avoid unnecessary Route53 configurations for this simple setup:

HostedZoneID, DNSZoneName, dbEndpointName

Multiple Region NLB Balanced Cluster

This works like the single-region setup, but set the Route53 configuration items "HostedZoneID" and "DNSZoneName", and deploy the CF template in multiple regions. The primary access hostname will be generated based on the vdb name. All stacks should have unique names to avoid conflicts in global resources such as IAM roles.

Single OR Multi-Region DNS Balanced Cluster

This setup is designed to use DNS rather than NLB to balance traffic to proxy nodes. NLB is still used to direct DNS queries to the proxies themselves, but because DNS query volume is low compared with database traffic, this should result in a very low bill for the NLB. For this configuration to be effective, the following should be noted:

- There should be a large number of application servers, or a high amount of thrashing of clients accessing the database/proxy. This is to ensure that caching of DNS results doesn't result in unbalanced traffic load on the proxies;

- If possible, the application servers should be configured to periodically close and open new connections, AND to re-query DNS periodically. This is very similar to guidance on using RDS to access a read-only URL to access multiple reader nodes.

- A public NLB will be created on a public IP to allow access to DNS and HTTP queries. The HTTP queries will be coming from Route53 health check nodes, and will simply be querying for the URL /status, which is exposed by the proxies for health monitoring. This NLB needs to be public for these functions to work.
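The guidance above on periodically reconnecting and re-querying DNS can be sketched on the application side as follows. This is an illustrative helper, not a Heimdall API: the class name, the five-minute default, and the hostname in the usage note are all assumptions, and many drivers or connection pools provide their own maximum-lifetime setting that achieves the same effect.

```python
import random
import socket
import time
from typing import Callable, List, Optional


def resolve_all(host: str, port: int) -> List[str]:
    """Perform a fresh lookup and return every address reported for host."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


class RecyclingTarget:
    """Tracks when a connection should be closed and its hostname re-resolved."""

    def __init__(
        self,
        host: str,
        port: int,
        max_age_seconds: float = 300.0,
        resolver: Callable[[str, int], List[str]] = resolve_all,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.host = host
        self.port = port
        self._max_age = max_age_seconds
        self._resolver = resolver
        self._clock = clock
        self._address: Optional[str] = None
        self._chosen_at = 0.0

    def expired(self) -> bool:
        """True when no address was chosen yet or the current one is too old."""
        if self._address is None:
            return True
        return self._clock() - self._chosen_at >= self._max_age

    def refresh(self) -> str:
        """Re-query DNS, pick one of the returned addresses, and reset the timer."""
        self._address = random.choice(self._resolver(self.host, self.port))
        self._chosen_at = self._clock()
        return self._address
```

In use, the application would check `expired()` before handing out a connection and, when it returns true, close the old connection and open a new one to the address returned by `refresh()`, for example with `RecyclingTarget("proxy.example.internal", 5432)`.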

To use this configuration:

- In the Heimdall VDB configuration, under the advanced options, the auto-scale mode checkbox should be checked

- DNS port set to 54 (53 may work in some environments, but not all, so it should be avoided)

- Health Check Port should be set to 80, along with a reasonably low health check interval (5 is suggested)

- The Proxy Redirect Name parameter in Advanced Options should be configured to either Proxy Private IP or AWS Public IP. In a multi-region AWS configuration, use the AWS Public IP unless all private IPs are routable across VPCs

- If a global RDS deployment is in place, make sure to check the "Use Response Metrics" option in the data source LB section to preferentially route to the closest database nodes for reads

- Deploy the template in all regions where proxies are desired
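To confirm that a proxy answers the health check described above, the /status URL can be probed manually. The sketch below uses only the Python standard library; treating an HTTP 200 response as "healthy" is an assumption, since the exact response of /status is not described here, and the address in the usage note is hypothetical.

```python
import urllib.error
import urllib.request


def status_url(host: str, port: int = 80) -> str:
    """Build the health check URL exposed by a proxy."""
    return f"http://{host}:{port}/status"


def is_healthy(host: str, port: int = 80, timeout: float = 5.0) -> bool:
    """Return True if the proxy answers /status with HTTP 200 (assumed meaning)."""
    try:
        with urllib.request.urlopen(status_url(host, port), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or a non-2xx response.
        return False
```

For example, `is_healthy("203.0.113.10")` would probe port 80, matching the Health Check Port suggested in the list above.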

In the template, make sure the following are set correctly:

LBType=dns

HostedZoneID and DNSZoneName are configured
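The load balancing parameters for the three deployment styles described above can be summarized in a small helper. This is a sketch for illustration; the scenario labels are made up here, and only the parameter names LBType, HostedZoneID, DNSZoneName, and dbEndpointName come from the template options.

```python
from typing import Dict

SCENARIOS = ("single-region-nlb", "multi-region-nlb", "dns")


def scenario_parameters(
    scenario: str,
    hosted_zone_id: str = "",
    dns_zone_name: str = "",
    db_endpoint_name: str = "",
) -> Dict[str, str]:
    """Return the load-balancing template parameters for a deployment style."""
    if scenario not in SCENARIOS:
        raise ValueError(f"unknown scenario: {scenario!r}")
    if scenario == "single-region-nlb":
        # Route53 options are blanked for the simple single-region setup.
        return {
            "LBType": "nlb",
            "HostedZoneID": "",
            "DNSZoneName": "",
            "dbEndpointName": "",
        }
    if not hosted_zone_id or not dns_zone_name:
        raise ValueError("HostedZoneID and DNSZoneName are required for this scenario")
    return {
        "LBType": "nlb" if scenario == "multi-region-nlb" else "dns",
        "HostedZoneID": hosted_zone_id,
        "DNSZoneName": dns_zone_name,
        # Optional failover name; may be left empty.
        "dbEndpointName": db_endpoint_name,
    }
```

For the multi-region and DNS styles, the same values would be supplied to the stack deployed in each region, with a unique stack name per region.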

Optional Variations

If Route53 configurations are used, then the dbEndpointName can be configured to provide a "failover" name to be used if the proxy environment fails. This applies to both NLB and DNS configurations.

If a customer has two endpoints configured for their database, one for reads and one for writes, then you can use the SecondaryProxyPort to expose a second port on the proxy to provide the second endpoint.

If Heimdall proxy peer autodiscovery is desired (when using Hazelcast), then the autodiscoveryKey and autodiscoveryValue can be set. When using the CloudFormation template, the AWS tag key should be "Name" and the value should be set to the name of the proxies, generally the CloudFormation stack name+"-proxy".
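As a small illustration of the autodiscovery naming convention, the helper below derives the two values from a stack name. The function name and the stack name in the usage note are hypothetical, and the "-proxy" suffix reflects the general case described above rather than a guarantee.

```python
from typing import Dict


def autodiscovery_parameters(stack_name: str) -> Dict[str, str]:
    """Derive the autodiscovery tag key and value from a stack name."""
    if not stack_name:
        raise ValueError("stack name must not be empty")
    return {
        "autodiscoveryKey": "Name",
        # Proxies are generally named after the stack with a "-proxy" suffix.
        "autodiscoveryValue": f"{stack_name}-proxy",
    }
```

For a stack named `heimdall-east`, this yields the tag key `Name` and the value `heimdall-east-proxy`.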

AWS IAM Permissions for the Proxy Instance

The CloudFormation template includes a default inline IAM policy to configure the permissions for the proxy instance. This policy is structured around five key permission sets:

- AllowExportHeimdallCertificates: Allows the proxy to use exportable AWS Certificate Manager (ACM) certificates. By default, this is restricted to certificates tagged with App: heimdall.

- AllowWriteProxyLogs: Grants permission to write logs to Amazon CloudWatch. This is limited to the proxy's specific log group resource ${ProxyLogGroupName}.

- AllowPutHeimdallProxyMetrics: Allows the proxy to publish metrics to Amazon CloudWatch. This is restricted to the specific CloudWatch namespace ${ProxyLogGroupName}-proxy.

- AllowDescribeEC2Instances: Permits the proxy to retrieve its own public IP address. This action is used by the autoscaling feature and is not limited to a specific resource.

- AllowReadHeimdallSecrets: Allows the proxy to read secrets from AWS Secrets Manager. By default, access is limited to secrets with the heimdall/ resource prefix.

Note: By default, the AWS partition, region, and account ID are automatically fetched from the proxy instance's metadata and credentials.
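To make the shape of these five permission sets concrete, here is an approximate reconstruction of such a policy as a Python dictionary. It is a sketch only: the statement IDs and restrictions come from the descriptions above, but the specific IAM actions, condition keys, and ARN patterns are assumptions and may differ from what the template actually contains. The account ID and log group name in the example call are hypothetical.

```python
import json
from typing import Any, Dict


def build_proxy_policy(
    partition: str, region: str, account_id: str, log_group_name: str
) -> Dict[str, Any]:
    """Approximate the default inline policy; actions and ARNs are assumptions."""
    prefix = f"arn:{partition}"
    scope = f"{region}:{account_id}"
    log_group_arn = f"{prefix}:logs:{scope}:log-group:{log_group_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowExportHeimdallCertificates",
                "Effect": "Allow",
                "Action": "acm:ExportCertificate",
                "Resource": f"{prefix}:acm:{scope}:certificate/*",
                "Condition": {"StringEquals": {"aws:ResourceTag/App": "heimdall"}},
            },
            {
                "Sid": "AllowWriteProxyLogs",
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": [log_group_arn, f"{log_group_arn}:*"],
            },
            {
                "Sid": "AllowPutHeimdallProxyMetrics",
                "Effect": "Allow",
                "Action": "cloudwatch:PutMetricData",
                "Resource": "*",
                "Condition": {
                    "StringEquals": {"cloudwatch:namespace": f"{log_group_name}-proxy"}
                },
            },
            {
                "Sid": "AllowDescribeEC2Instances",
                "Effect": "Allow",
                "Action": "ec2:DescribeInstances",
                "Resource": "*",
            },
            {
                "Sid": "AllowReadHeimdallSecrets",
                "Effect": "Allow",
                "Action": "secretsmanager:GetSecretValue",
                "Resource": f"{prefix}:secretsmanager:{scope}:secret:heimdall/*",
            },
        ],
    }


# Hypothetical account ID and log group name, for illustration only.
policy = build_proxy_policy("aws", "us-east-1", "123456789012", "heimdall-proxy-logs")
policy_json = json.dumps(policy, indent=2)
```

A document like `policy_json` could serve as a starting point when comparing against the inline policy in the template or when drafting a managed policy of your own.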

Policy Customization

The template includes an inline policy by default, which we provide as a least-privilege starting point. You have two main options for customization:

- Modify the Default: You can modify the inline policy directly within the CloudFormation template to fit your specific needs.

- Use a Managed Policy: You can create your own IAM managed policy (examples are in the environment/aws directory) and specify it in the CloudFormation template parameters. This will attach your policy instead of the default inline one.